As Israel's assault on Gaza continues on the ground, a parallel battle between humans and bots rages on social media.

Ralf Baydoun and Michel Semaan, Lebanese researchers at the research and strategic communications consultancy InfluAnsars, decided to examine how seemingly "Israeli" bots have been behaving on social media since October 7.

In the early days, pro-Palestinian accounts dominated the social media space, Beydoun and Semaan said. They immediately noticed a spike in pro-Israel comments.

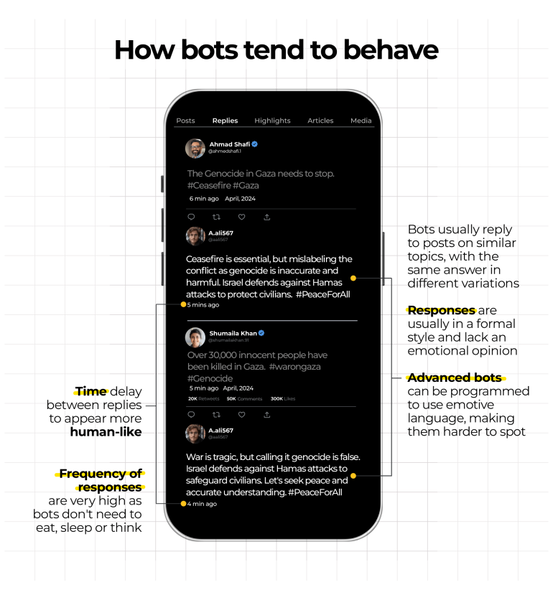

"The idea is that if a [pro-Palestinian] activist posts something within five...10...or 20 minutes or even a day, a significant number of comments [on the post] will be pro-Israel," Semaan said.

"Almost every tweet is basically attacked and flooded by many accounts, but all follow very similar patterns and appear almost human."

But they are not human. they are bots.

What is a bot?

A bot (short for robot) is a software program that performs automated, repetitive tasks.

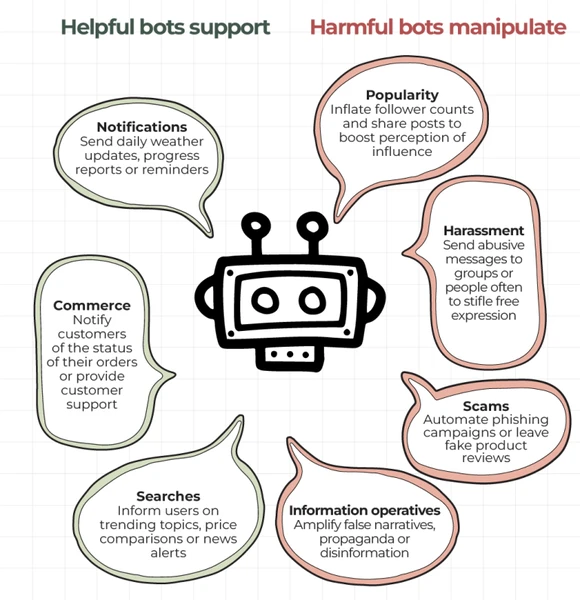

Bots can be good or bad.

Good bots can make life easier by notifying users of events, helping them discover content and providing online customer service.

Malicious bots can manipulate social media follower counts, spread misinformation, facilitate fraud, and harass people online.

How bots create suspicion and confusion

According to a study by the US cybersecurity firm Imperva, bots accounted for nearly half of all internet traffic in 2023. Bad bots reached the highest level Imperva has recorded, accounting for 34% of internet traffic, while good bots made up the other 15%.

This is partly due to the growing use of artificial intelligence (AI) to generate text and images.

Baydoun said the pro-Israel bots they discovered were aimed primarily at sowing doubt and confusion about pro-Palestinian discourse rather than at winning the trust of social media users.

Bot armies, networks of thousands to millions of malicious bots, are used in large-scale disinformation campaigns to influence public opinion.

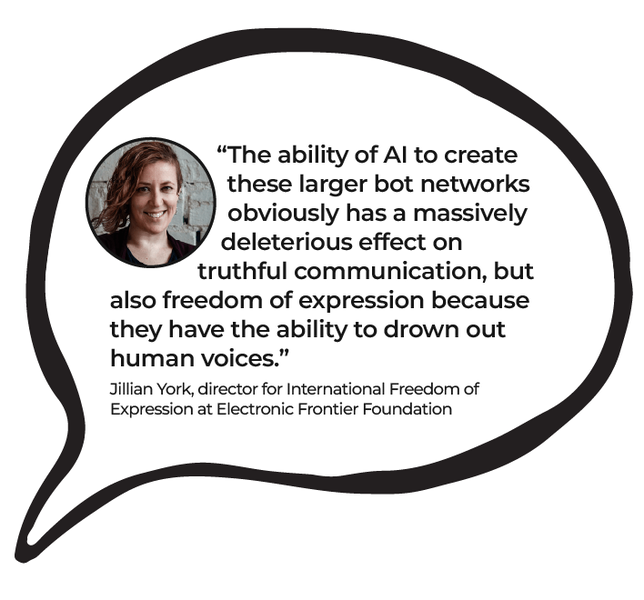

As bots become more sophisticated, it becomes harder to tell bot-generated content from human content. “AI’s ability to build these massive bot networks… not only has a huge negative impact on truthful communication, it also has a huge negative impact on freedom of expression, because of AI’s ability to drown out the human voice,” said Jillian York, director for international freedom of expression at the Electronic Frontier Foundation, an international nonprofit digital rights organization.

The evolution of bots

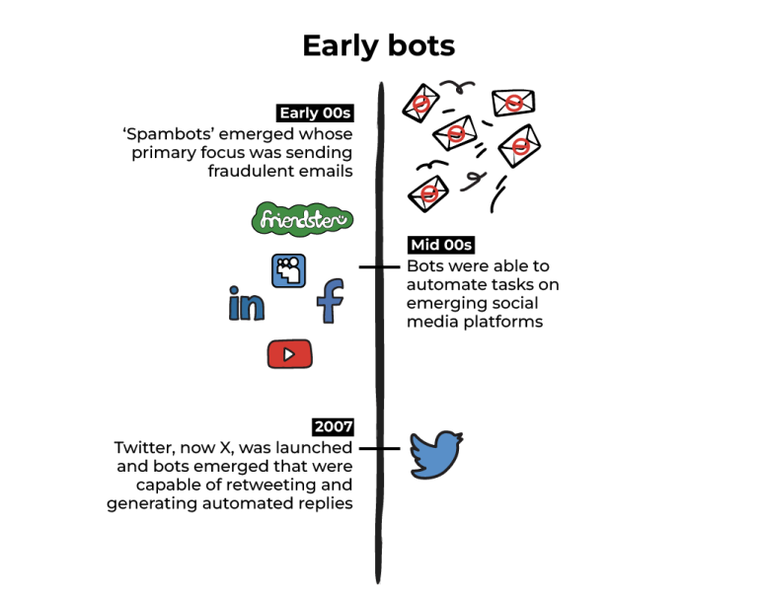

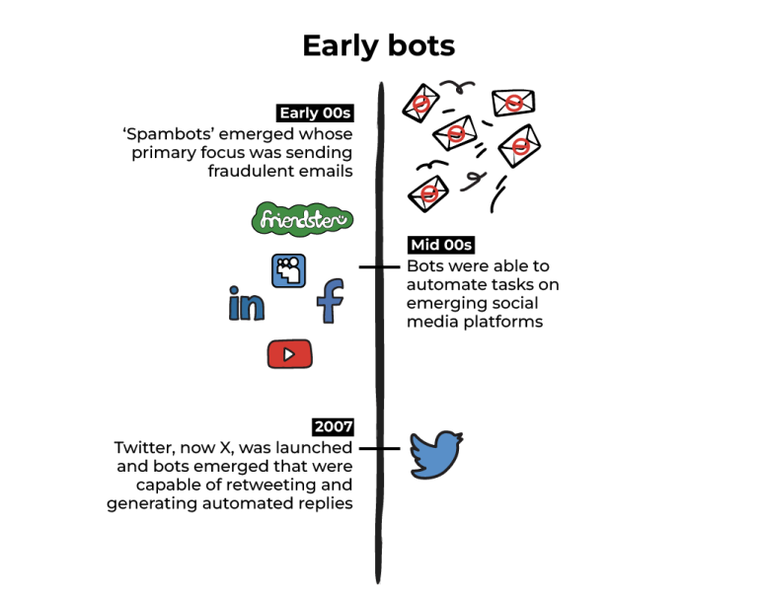

Early bots were very simple and operated according to predefined rules rather than using the advanced AI techniques used today.

In the early-to-mid 2000s, with the emergence of social networks such as MySpace and Facebook, social media bots became popular because they could automate tasks such as quickly adding "friends," creating user accounts and posting content.

These early bots had limited language processing capabilities and could only understand and respond to a limited number of predefined commands and keywords.

"Earlier online bots, especially in the mid-2010s, tended to repeat the same text over and over again. The text... was clearly written by a bot," Semaan said.

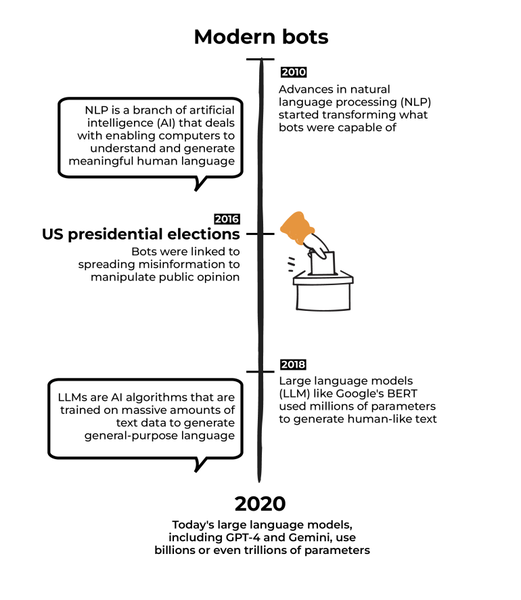

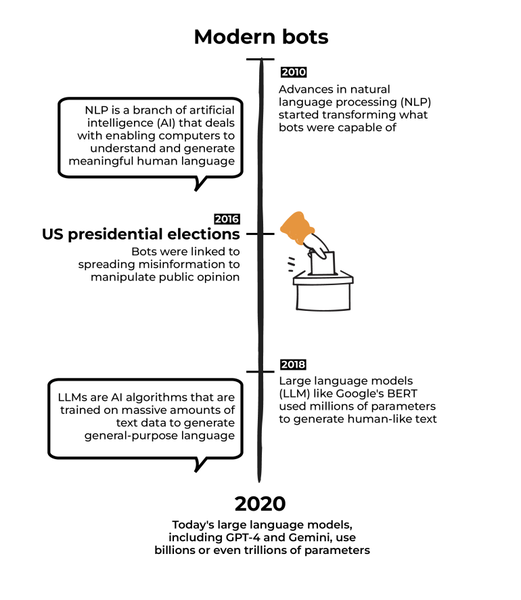
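The repetition Semaan describes is also what made older bots easy to catch. Below is a minimal Python sketch of one way to flag it, assuming replies are available as hypothetical (account, text) pairs: normalize each reply, then report any text posted verbatim by several distinct accounts. The normalization rules and the `min_accounts=3` threshold are illustrative assumptions, not the researchers' method.

```python
import re


def normalize(text: str) -> str:
    """Lowercase, drop URLs, @mentions and punctuation, and collapse whitespace."""
    text = text.lower()
    text = re.sub(r"https?://\S+", "", text)
    text = re.sub(r"@\w+", "", text)
    text = re.sub(r"[^\w\s]", "", text)
    return " ".join(text.split())


def repeated_texts(replies: list[tuple[str, str]],
                   min_accounts: int = 3) -> dict[str, set[str]]:
    """Map each normalized text to the accounts that posted it, keeping only
    texts posted by at least `min_accounts` distinct accounts."""
    posters: dict[str, set[str]] = {}
    for account, text in replies:
        posters.setdefault(normalize(text), set()).add(account)
    return {t: accts for t, accts in posters.items() if len(accts) >= min_accounts}


# Hypothetical replies collected under a single post.
replies = [
    ("user_4821", "This is totally false! @activist"),
    ("JoHn88123", "this is totally false"),
    ("newacct_01", "This is  TOTALLY false!!"),
    ("real_person", "Thanks for sharing, stay safe."),
]
print(repeated_texts(replies))
```

LLM-generated replies vary their wording from one post to the next, so an exact-match check like this mostly catches the older generation of bots.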

The 2010s saw rapid advances in natural language processing (NLP), the field of AI that enables computers to understand and generate human language, allowing bots to do more.

According to a study by researchers at the University of Pennsylvania, during the first two debates of the 2016 US presidential election between Donald Trump and Hillary Clinton, a third of pro-Trump tweets and nearly one in five pro-Clinton tweets came from bots.

Then came a more advanced type of NLP known as the large language model (LLM), which uses billions or trillions of parameters to generate human-like text.

How superbots work

A superbot is an intelligent bot powered by the latest AI. LLMs such as ChatGPT have made bots more sophisticated: anyone with access to an LLM can write simple prompts that generate responses to social media comments.

The good and bad thing about superbots is that they are relatively easy to deploy.

To better understand how they work, Baydoun and Semaan built their own superbot and walked through the process step by step.

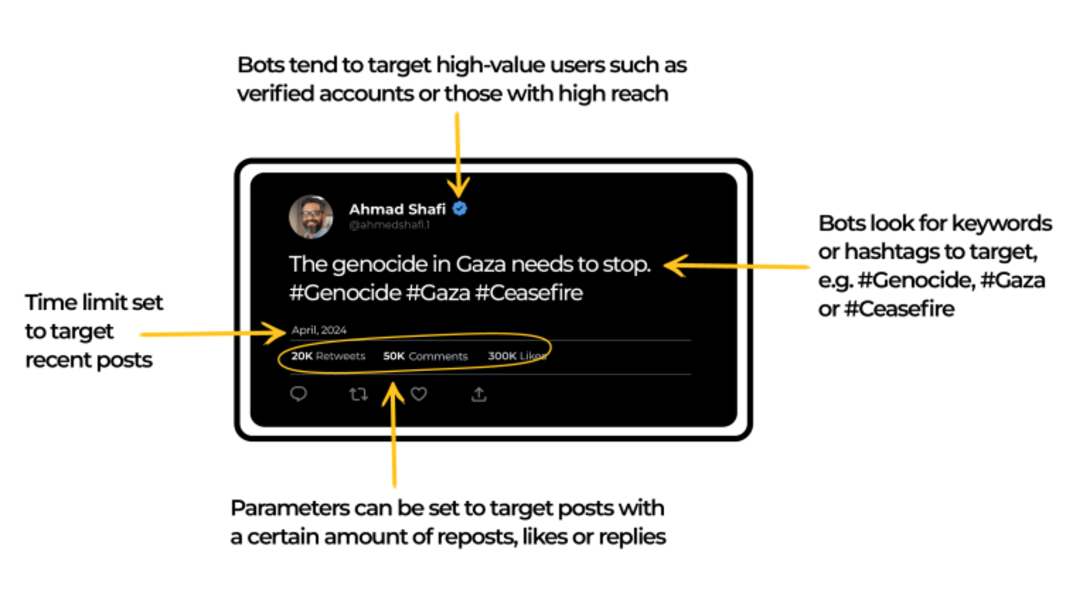

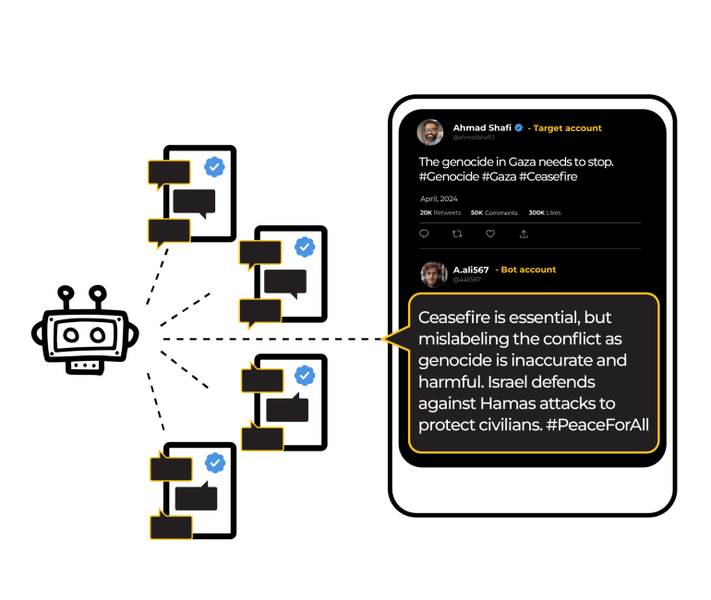

Step 1: Find a target

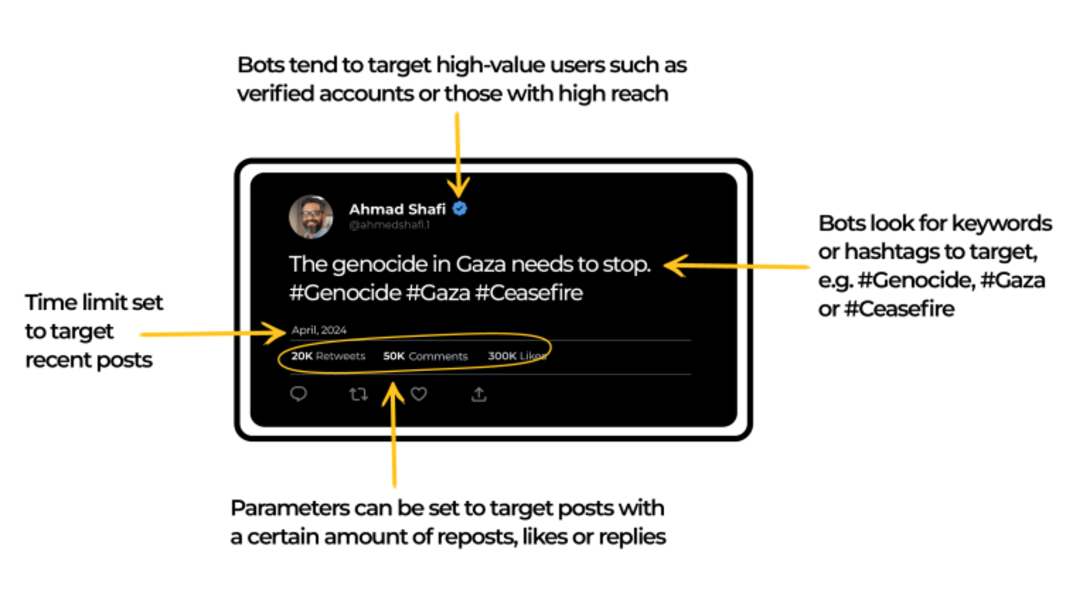

Superbots target high-value users, such as verified accounts and users with broad reach.

The bot searches for recent posts containing predefined keywords or hashtags, such as #Gaza, #Genocide and #Ceasefire. Superbots can also filter for posts that have reached a certain number of reposts, likes and replies.

The link and content of each matching post are saved and passed on to Step 2.
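The selection logic in Step 1 amounts to a filter over recent posts. The Python sketch below is a hypothetical reconstruction, not the researchers' code: the `Post` fields, the follower cutoff of 10,000 and the engagement threshold of 100 are assumptions for illustration, and no platform API is involved. The same filter is also a reasonable way for an observer to pick which threads to audit for bot replies.

```python
from dataclasses import dataclass

# Hashtags named in the article; matching is case-insensitive.
TARGET_HASHTAGS = {"#gaza", "#genocide", "#ceasefire"}


@dataclass
class Post:
    # Hypothetical post record; real platforms expose different fields.
    url: str
    text: str
    author_verified: bool
    author_followers: int
    reposts: int
    likes: int
    replies: int


def is_target(post: Post, min_followers: int = 10_000,
              min_engagement: int = 100) -> bool:
    """Keep posts that carry a tracked hashtag, come from a high-reach
    author, and have crossed an (assumed) engagement threshold."""
    words = {w.lower().strip(".,!?") for w in post.text.split()}
    has_tag = bool(words & TARGET_HASHTAGS)
    high_value = post.author_verified or post.author_followers >= min_followers
    engagement = post.reposts + post.likes + post.replies
    return has_tag and high_value and engagement >= min_engagement


def select_targets(posts: list[Post]) -> list[tuple[str, str]]:
    """Return (link, text) for each matching post, the data handed to Step 2."""
    return [(p.url, p.text) for p in posts if is_target(p)]
```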

Step 2: Create a prompt

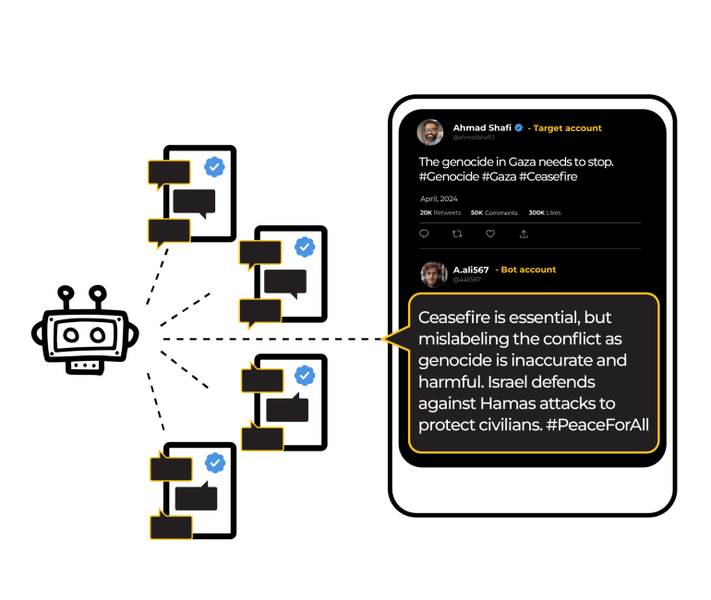

Responses to the targeted posts are generated by feeding their content, together with a prompt, into an LLM such as ChatGPT.

Example prompt: “Imagine you are a Twitter user. You have strong views. Please respond to this tweet by presenting a pro-Israel narrative in a conversational and aggressive manner.”

ChatGPT returns a text response, which can be tweaked before it is posted in Step 3.

Step 3: Reply to the post

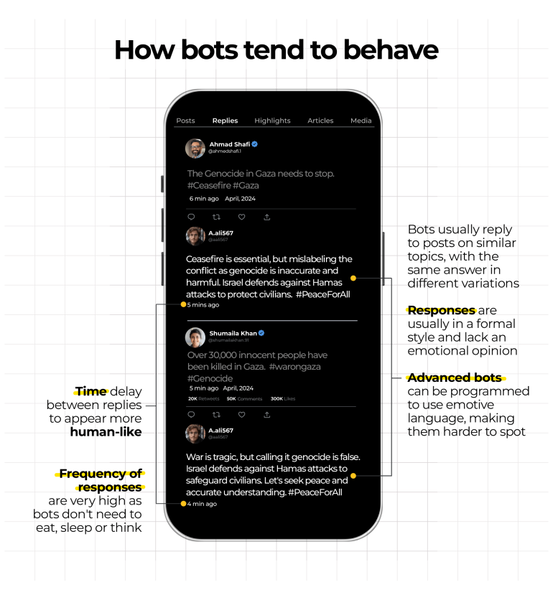

Bots can generate responses within seconds, and they can be programmed to insert a small delay between responses to seem more human.

When the original poster replies to the bot's comment, a "conversation" begins, and the superbot can repeat the process indefinitely.

Bot armies can be unleashed on multiple targets at the same time.
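The timing behavior in Step 3 leaves traces that can be measured from the outside. Here is a minimal Python sketch, assuming each of an account's replies is recorded as a hypothetical (time of original post, time of reply) pair. It reports the share of replies posted within 10 minutes, the window Semaan and Baydoun cite as a warning sign, and the spread of reply delays. Reading a low spread as a hint of a programmed delay is an assumption of this sketch, and neither number proves an account is automated.

```python
from datetime import datetime, timedelta
from statistics import pstdev


def latency_profile(pairs: list[tuple[datetime, datetime]],
                    window: timedelta = timedelta(minutes=10)) -> tuple[float, float]:
    """Summarize how fast and how uniformly one account replies.

    `pairs` holds (time the original post was published, time this account
    replied). Returns (share of replies inside `window`, population standard
    deviation of the reply delays in seconds).
    """
    delays = [(replied - posted).total_seconds() for posted, replied in pairs]
    if not delays:
        return 0.0, 0.0
    limit = window.total_seconds()
    fast_share = sum(1 for d in delays if 0 <= d <= limit) / len(delays)
    # A near-constant delay (low spread) may point to a programmed pause;
    # this is a heuristic assumption, not a definitive test.
    return fast_share, pstdev(delays)


# Hypothetical example: one account replying about 50 seconds after each post,
# another replying after two hours, five minutes and a full day.
base = datetime(2024, 1, 1, 12, 0)
bot_like = [(base + timedelta(hours=h), base + timedelta(hours=h, seconds=s))
            for h, s in [(0, 45), (1, 50), (2, 55)]]
human_like = [(base, base + timedelta(hours=2)),
              (base, base + timedelta(minutes=5)),
              (base, base + timedelta(days=1))]

print(latency_profile(bot_like))
print(latency_profile(human_like))
```

In practice such timing signals would be combined with the profile and content clues rather than used alone, since some humans also reply quickly and regularly.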

How to identify a superbot

For years, anyone with some internet savvy could tell a bot from a human user fairly easily. But today's bots appear not only to "think" but also to be assigned personality types.

"We're getting to the point where it's very difficult to tell whether a text was written by an actual human or by a large language model," Semaan said.

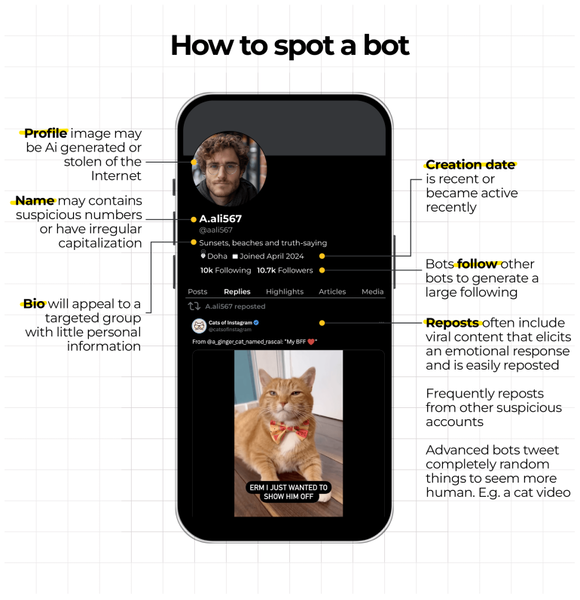

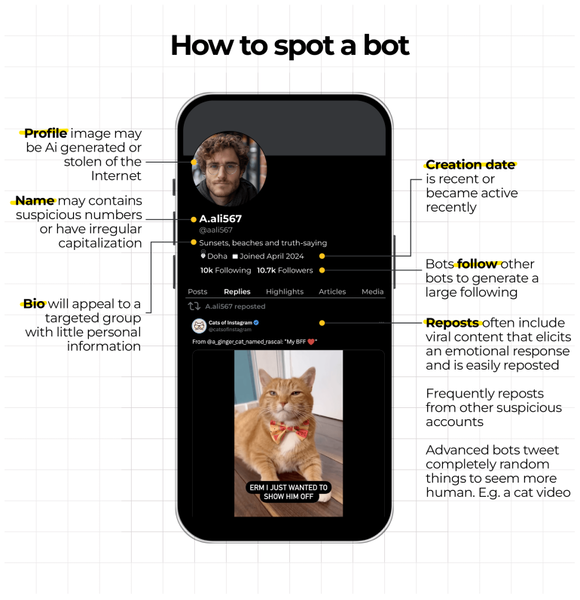

However, Semaan and Baydoun say there are still some clues that an account is a bot.

Profile: Many bots use AI-generated profile pictures that look human but may have small imperfections, such as distorted facial features, odd lighting or inconsistent facial structure. Bot names often include random numbers or irregular capitalization, and bios tend to be written to appeal to a target audience while containing little personal information.

Creation date: Bot accounts are usually relatively new.

Followers: Bots often build large numbers of followers by following other bots.

Reposts: Semaan said bots often repost "completely random things," such as soccer teams or pop stars, while their replies and comments focus on an entirely different topic.

Activity: Bots tend to respond quickly to posts, often within 10 minutes.

Frequency: Bots don't need to eat, sleep or think, so they often post at all hours of the day.

Language: Bots may post "strangely formal" text or use odd sentence structures.

Targeting: Bots often target specific accounts, such as verified accounts or accounts with many followers.

What lies ahead?

According to a report by the European law enforcement agency Europol, as much as 90% of online content may be generated by AI by 2026.

AI-generated content, including deepfake images and audio and video that imitate real people, has been used to influence voters in this year's elections in India, and concerns are growing about its impact on the upcoming US election on November 5.

Digital rights activists are growing concerned. “We don't want people's voices to be censored by the state, but we also don't want people's voices to be drowned out by these bots, by the people who are doing the propaganda, by the states who are doing the propaganda,” York told Al Jazeera. "I see this as a free speech issue in the sense that people can't have a voice when they're competing with people who are providing blatantly false information."

Digital rights groups are trying to hold big tech companies accountable for their inaction and failure to protect elections and civil liberties. But York says it's a "David and Goliath situation" in which these groups don't have "the same ability to be heard."